Version 2 (modified by jazz, 13 years ago)

Lab 6

Running MapReduce in Local Mode by Example

Perform the following exercises in your local Hadoop4Win environment.

Example 1: Word Count (WordCount)

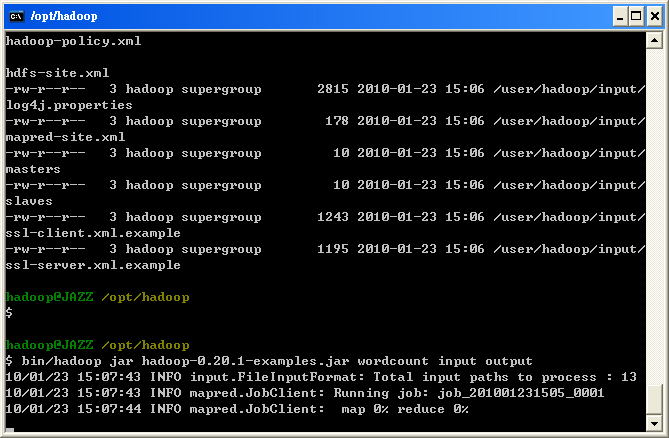

- STEP 1 : Practice submitting a MapReduce job with the command "hadoop jar <local jar file> <class name> <parameters>"

    Jazz@human ~ $ cd /opt/hadoop/
    Jazz@human /opt/hadoop $ hadoop jar hadoop-*-examples.jar wordcount input output
    11/10/21 14:08:58 INFO input.FileInputFormat: Total input paths to process : 12
    11/10/21 14:09:00 INFO mapred.JobClient: Running job: job_201110211130_0001
    11/10/21 14:09:01 INFO mapred.JobClient:  map 0% reduce 0%
    11/10/21 14:09:31 INFO mapred.JobClient:  map 16% reduce 0%
    11/10/21 14:10:29 INFO mapred.JobClient:  map 100% reduce 27%
    11/10/21 14:10:33 INFO mapred.JobClient:  map 100% reduce 100%
    11/10/21 14:10:35 INFO mapred.JobClient: Job complete: job_201110211130_0001
    11/10/21 14:10:35 INFO mapred.JobClient: Counters: 17
    11/10/21 14:10:35 INFO mapred.JobClient:   Job Counters
    11/10/21 14:10:35 INFO mapred.JobClient:     Launched reduce tasks=1
    11/10/21 14:10:35 INFO mapred.JobClient:     Launched map tasks=12
    11/10/21 14:10:35 INFO mapred.JobClient:     Data-local map tasks=12
    11/10/21 14:10:35 INFO mapred.JobClient:   FileSystemCounters
    11/10/21 14:10:35 INFO mapred.JobClient:     FILE_BYTES_READ=16578
    11/10/21 14:10:35 INFO mapred.JobClient:     HDFS_BYTES_READ=18312
    11/10/21 14:10:35 INFO mapred.JobClient:     FILE_BYTES_WRITTEN=32636
    11/10/21 14:10:35 INFO mapred.JobClient:     HDFS_BYTES_WRITTEN=10922
    11/10/21 14:10:35 INFO mapred.JobClient:   Map-Reduce Framework
    11/10/21 14:10:35 INFO mapred.JobClient:     Reduce input groups=592
    11/10/21 14:10:35 INFO mapred.JobClient:     Combine output records=750
    11/10/21 14:10:35 INFO mapred.JobClient:     Map input records=553
    11/10/21 14:10:35 INFO mapred.JobClient:     Reduce shuffle bytes=15674
    11/10/21 14:10:35 INFO mapred.JobClient:     Reduce output records=592
    11/10/21 14:10:35 INFO mapred.JobClient:     Spilled Records=1500
    11/10/21 14:10:35 INFO mapred.JobClient:     Map output bytes=24438
    11/10/21 14:10:35 INFO mapred.JobClient:     Combine input records=1755
    11/10/21 14:10:35 INFO mapred.JobClient:     Map output records=1755
    11/10/21 14:10:35 INFO mapred.JobClient:     Reduce input records=750
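The counters at the end of this log trace the records through each stage: 1755 map output records are condensed by the combiner into 750, and the reducer merges those into 592 distinct words. The Python sketch below mimics that flow on a tiny made-up input. It only illustrates the idea and is not the code shipped in hadoop-*-examples.jar; the sample text, the two-split layout, and all function names are assumptions.

```python
from collections import Counter


def map_phase(line):
    """Mapper: emit one (word, 1) pair per whitespace-separated token."""
    return [(word, 1) for word in line.split()]


def combine(pairs):
    """Combiner: pre-sum the counts inside a single map task's output."""
    totals = Counter()
    for word, count in pairs:
        totals[word] += count
    return list(totals.items())


def reduce_phase(pairs):
    """Reducer: sum the counts per word across all map tasks, sorted by word."""
    totals = Counter()
    for word, count in pairs:
        totals[word] += count
    return sorted(totals.items())


# Hypothetical input: two "files", each processed by its own map task.
splits = [
    ["hadoop runs mapreduce", "mapreduce runs jobs"],
    ["hadoop stores files"],
]

map_output = []    # every (word, 1) pair emitted by the mappers
reduce_input = []  # what is left after each map task's combiner has run
for split in splits:
    pairs = [pair for line in split for pair in map_phase(line)]
    map_output.extend(pairs)
    reduce_input.extend(combine(pairs))

result = reduce_phase(reduce_input)

print("Map output records     =", len(map_output))
print("Combine output records =", len(reduce_input))
print("Reduce output records  =", len(result))
for word, count in result:
    print(f"{word}\t{count}")
```

On the sample text this reports 9 map output records, 7 combine output records, and 6 reduce output records, the same shrinking pattern as the three counters in the log.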

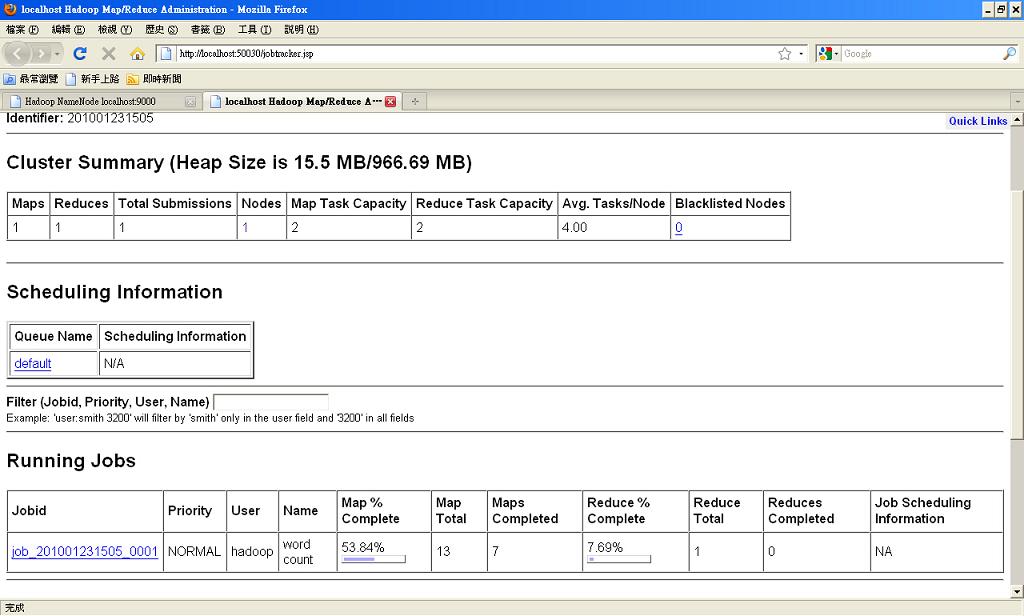

- STEP 2 : Open http://localhost:50030 in a browser to check the status of the running MapReduce job
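To find a job on that page you need its job ID, which JobClient prints when the job starts (for example job_201110211130_0001). The Python sketch below splits such an ID into its parts and builds a link to the job's page. Two things here are assumptions rather than facts stated in this lab: that the middle field is the JobTracker start time in yyyyMMddHHmm form, and that the per-job page is served as jobdetails.jsp?jobid=<job id>. The helper names are hypothetical.

```python
from datetime import datetime
from typing import NamedTuple


class JobId(NamedTuple):
    tracker_started: datetime  # assumed: when this JobTracker instance started
    sequence: int              # n-th job submitted to that JobTracker


def parse_job_id(job_id: str) -> JobId:
    """Split 'job_<timestamp>_<sequence>' into its two fields."""
    prefix, stamp, seq = job_id.split("_")
    if prefix != "job":
        raise ValueError(f"not a job ID: {job_id!r}")
    return JobId(datetime.strptime(stamp, "%Y%m%d%H%M"), int(seq))


def job_details_url(job_id: str, tracker: str = "http://localhost:50030") -> str:
    """Build a link to the job's page (assumed path: jobdetails.jsp)."""
    return f"{tracker}/jobdetails.jsp?jobid={job_id}"


parsed = parse_job_id("job_201110211130_0001")
print(parsed.tracker_started.isoformat(), parsed.sequence)
print(job_details_url("job_201110211130_0001"))
```

Under those assumptions, the sequence number explains why the jobs in this lab are numbered 0001, 0002, and 0003 while sharing the same timestamp field.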

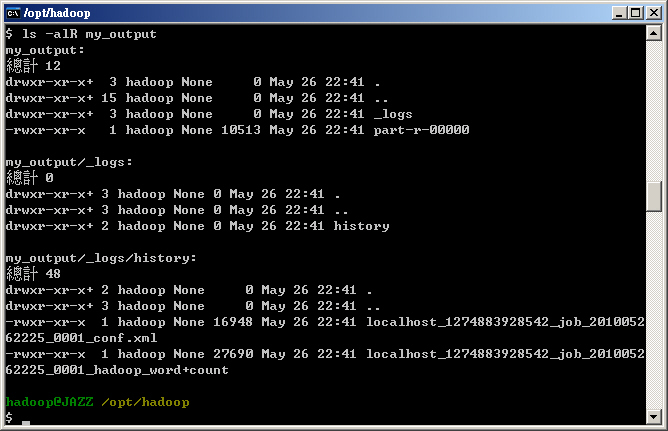

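- STEP 3 : Use the HDFS command "hadoop fs -get <HDFS file/dir> <local file/dir>" to copy the results to the local file system. Note that the output files are all named part-r-*, and that the job's runtime parameters are recorded in <HOSTNAME>_<TIME>_job_<JOBID>_0001_conf.xml. It is worth comparing the contents of that XML file with the parameters in the Hadoop configuration files.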

    Jazz@human /opt/hadoop $ hadoop fs -get output my_output
    Jazz@human /opt/hadoop $ ls -alR my_output
    my_output:
    total 12
    drwxr-xr-x+  3 Jazz None     0 Oct 21 14:12 .
    drwxr-xr-x+ 15 Jazz None     0 Oct 21 14:12 ..
    drwxr-xr-x+  3 Jazz None     0 Oct 21 14:12 _logs
    -rwxr-xr-x   1 Jazz None 10922 Oct 21 14:12 part-r-00000

    my_output/_logs:
    total 0
    drwxr-xr-x+ 3 Jazz None 0 Oct 21 14:12 .
    drwxr-xr-x+ 3 Jazz None 0 Oct 21 14:12 ..
    drwxr-xr-x+ 2 Jazz None 0 Oct 21 14:12 history

    my_output/_logs/history:
    total 48
    drwxr-xr-x+ 2 Jazz None     0 Oct 21 14:12 .
    drwxr-xr-x+ 3 Jazz None     0 Oct 21 14:12 ..
    -rwxr-xr-x  1 Jazz None 26004 Oct 21 14:12 localhost_1319167815125_job_201110211130_0001_Jazz_word+count
    -rwxr-xr-x  1 Jazz None 16984 Oct 21 14:12 localhost_1319167815125_job_201110211130_0001_conf.xml
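One way to compare the job's recorded parameters with the Hadoop configuration files is to load the conf.xml into a dictionary. The Python sketch below assumes the file uses the usual Hadoop layout of <configuration> containing <property> elements with <name> and <value> children. The excerpt in SAMPLE is made up for illustration (the property names are common Hadoop settings, the values are not copied from the real file), and load_job_conf is a hypothetical helper.

```python
import xml.etree.ElementTree as ET


def load_job_conf(xml_text: str) -> dict:
    """Return {property name: value} for every <property> in the document."""
    root = ET.fromstring(xml_text)
    conf = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        if name is not None:
            conf[name] = prop.findtext("value", default="")
    return conf


# Hypothetical excerpt; the real file in my_output/_logs/history is much longer.
SAMPLE = """<configuration>
  <property><name>mapred.job.name</name><value>word count</value></property>
  <property><name>mapred.reduce.tasks</name><value>1</value></property>
  <property><name>dfs.replication</name><value>1</value></property>
</configuration>"""

conf = load_job_conf(SAMPLE)
for name in sorted(conf):
    print(f"{name} = {conf[name]}")
```

To try it on the real file, read my_output/_logs/history/localhost_1319167815125_job_201110211130_0001_conf.xml with open(...).read() and pass the text to load_job_conf; loading a site configuration file the same way lets you look for keys whose values differ.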

Example 2: Filtering Content with Regular Expressions (grep)

- grep is a command that extracts text matching a pattern from documents. The grep program in the Hadoop examples extracts every string that matches a given regular expression and counts how often each matched string occurs.

    Jazz@human /opt/hadoop $ hadoop jar hadoop-*-examples.jar grep input lab5_out1 'dfs[a-z.]+'
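The Python sketch below shows the same idea on a few made-up lines: collect every substring that matches the regular expression, count the occurrences of each, then list the matches from most to least frequent. It is a minimal illustration under assumptions, not the implementation in hadoop-*-examples.jar; the sample lines, the function names, and the alphabetical tie-break are all choices made for this example.

```python
import re
from collections import Counter


def grep_count(lines, pattern):
    """Count how often each matched substring occurs in the given lines."""
    regex = re.compile(pattern)
    counts = Counter()
    for line in lines:
        for match in regex.finditer(line):
            counts[match.group(0)] += 1
    return counts


def sort_by_count(counts):
    """Order matches by descending count; ties are broken alphabetically here."""
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical input lines standing in for the files in the input directory.
lines = [
    "set dfs.replication to 1",
    "run hadoop dfsadmin -report",
    "dfs.class and dfs.period control metrics",
    "dfs.class may also name a file context",
]

for text, count in sort_by_count(grep_count(lines, r"dfs[a-z.]+")):
    print(f"{count}\t{text}")
```

Counting first and sorting afterwards are kept as two separate functions because the real job also runs in two stages, which is why a second job ID appears in its console output.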

- You should see output like this:

    Jazz@human /opt/hadoop $ hadoop jar hadoop-*-examples.jar grep input lab5_out1 'dfs[a-z.]+'
    11/10/21 14:17:39 INFO mapred.FileInputFormat: Total input paths to process : 12
    11/10/21 14:17:39 INFO mapred.JobClient: Running job: job_201110211130_0002
    11/10/21 14:17:40 INFO mapred.JobClient:  map 0% reduce 0%
    11/10/21 14:17:54 INFO mapred.JobClient:  map 8% reduce 0%
    11/10/21 14:17:57 INFO mapred.JobClient:  map 16% reduce 0%
    11/10/21 14:18:03 INFO mapred.JobClient:  map 33% reduce 0%
    11/10/21 14:18:13 INFO mapred.JobClient:  map 41% reduce 0%
    11/10/21 14:18:16 INFO mapred.JobClient:  map 50% reduce 11%
    11/10/21 14:18:19 INFO mapred.JobClient:  map 58% reduce 11%
    11/10/21 14:18:23 INFO mapred.JobClient:  map 66% reduce 11%
    11/10/21 14:18:30 INFO mapred.JobClient:  map 83% reduce 16%
    11/10/21 14:18:33 INFO mapred.JobClient:  map 83% reduce 22%
    11/10/21 14:18:36 INFO mapred.JobClient:  map 91% reduce 22%
    11/10/21 14:18:39 INFO mapred.JobClient:  map 100% reduce 22%
    11/10/21 14:18:42 INFO mapred.JobClient:  map 100% reduce 27%
    11/10/21 14:18:48 INFO mapred.JobClient:  map 100% reduce 30%
    11/10/21 14:18:54 INFO mapred.JobClient:  map 100% reduce 100%
    11/10/21 14:18:56 INFO mapred.JobClient: Job complete: job_201110211130_0002
    11/10/21 14:18:56 INFO mapred.JobClient: Counters: 18
    11/10/21 14:18:56 INFO mapred.JobClient:   Job Counters
    11/10/21 14:18:56 INFO mapred.JobClient:     Launched reduce tasks=1
    11/10/21 14:18:56 INFO mapred.JobClient:     Launched map tasks=12
    11/10/21 14:18:56 INFO mapred.JobClient:     Data-local map tasks=12
    11/10/21 14:18:56 INFO mapred.JobClient:   FileSystemCounters
    11/10/21 14:18:56 INFO mapred.JobClient:     FILE_BYTES_READ=888
    11/10/21 14:18:56 INFO mapred.JobClient:     HDFS_BYTES_READ=18312
    11/10/21 14:18:56 INFO mapred.JobClient:     FILE_BYTES_WRITTEN=1496
    11/10/21 14:18:56 INFO mapred.JobClient:     HDFS_BYTES_WRITTEN=280
    11/10/21 14:18:56 INFO mapred.JobClient:   Map-Reduce Framework
    11/10/21 14:18:56 INFO mapred.JobClient:     Reduce input groups=7
    11/10/21 14:18:56 INFO mapred.JobClient:     Combine output records=7
    11/10/21 14:18:56 INFO mapred.JobClient:     Map input records=553
    11/10/21 14:18:56 INFO mapred.JobClient:     Reduce shuffle bytes=224
    11/10/21 14:18:56 INFO mapred.JobClient:     Reduce output records=7
    11/10/21 14:18:56 INFO mapred.JobClient:     Spilled Records=14
    11/10/21 14:18:56 INFO mapred.JobClient:     Map output bytes=193
    11/10/21 14:18:56 INFO mapred.JobClient:     Map input bytes=18312
    11/10/21 14:18:56 INFO mapred.JobClient:     Combine input records=10
    11/10/21 14:18:56 INFO mapred.JobClient:     Map output records=10
    11/10/21 14:18:56 INFO mapred.JobClient:     Reduce input records=7
    11/10/21 14:18:56 WARN mapred.JobClient: Use GenericOptionsParser for parsing the arguments. Applications should implement Tool for the same.
    11/10/21 14:18:57 INFO mapred.FileInputFormat: Total input paths to process : 1
    11/10/21 14:18:57 INFO mapred.JobClient: Running job: job_201110211130_0003
    ( ... skip ... )

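- This example searches all files in the input directory for strings that consist of dfs followed by one or more characters, each of which is a lowercase letter (a-z) or a period.

- Next, check the result that grep wrote to HDFS: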

    Jazz@human /opt/hadoop $ hadoop fs -ls lab5_out1
    Found 2 items
    drwxr-xr-x   - Jazz supergroup          0 2011-10-21 14:18 /user/Jazz/lab5_out1/_logs
    -rw-r--r--   1 Jazz supergroup         96 2011-10-21 14:19 /user/Jazz/lab5_out1/part-00000
    Jazz@human /opt/hadoop $ hadoop fs -cat lab5_out1/part-00000
    3       dfs.class
    2       dfs.period
    1       dfs.file
    1       dfs.replication
    1       dfs.servers
    1       dfsadmin
    1       dfsmetrics.log
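As a quick sanity check, the result file can be parsed and compared with the regular expression and with the counters reported by the job. The Python sketch below assumes each line of part-00000 is a count and a match separated by a single tab; the seven rows are the ones printed by hadoop fs -cat above, and parse_grep_output is a hypothetical helper.

```python
import re

PATTERN = re.compile(r"dfs[a-z.]+")

# Content of lab5_out1/part-00000, assuming "<count>\t<match>" per line.
PART_00000 = (
    "3\tdfs.class\n"
    "2\tdfs.period\n"
    "1\tdfs.file\n"
    "1\tdfs.replication\n"
    "1\tdfs.servers\n"
    "1\tdfsadmin\n"
    "1\tdfsmetrics.log\n"
)


def parse_grep_output(text):
    """Turn the tab-separated text into a list of (count, match) tuples."""
    rows = []
    for line in text.splitlines():
        count, match = line.split("\t", 1)
        rows.append((int(count), match))
    return rows


rows = parse_grep_output(PART_00000)
counts = [count for count, _ in rows]

# Every listed string should match the whole pattern, including the periods.
all_match = all(PATTERN.fullmatch(match) for _, match in rows)
# The rows should be ordered from most to least frequent.
is_descending = counts == sorted(counts, reverse=True)

print("rows:", len(rows))
print("total matches:", sum(counts))
print("size in bytes:", len(PART_00000.encode("utf-8")))
print("all rows match the pattern:", all_match)
print("sorted by descending count:", is_descending)
```

The 7 rows and 10 total matches agree with "Reduce output records=7" and "Map output records=10" in the job log, and under the tab-separated assumption the text is 96 bytes long, the size that hadoop fs -ls reports for part-00000.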